This summer, Brandeis University students Eben, Claire, R, and Nila joined the Justice Brandeis Semester Program for a course on Voice, Web, and Mobile Apps. Although they hadn’t known each other before and came from different fields of study, they created “DocFinder”, an app that lets anyone find a provider near them with a simple voice command.

Tell us about the course and how the DocFinder project started.

We joined this summer course to put our theoretical knowledge of computer science into practice. The class had 19 students, who formed five groups. Each group chose one industry to focus on for its app. The results included a LinkedIn-style social media platform for students, a Craigslist-style marketplace for buying and selling, a voting and politics app, and a voice-interactive cooking app. We chose healthcare and built a web app called DocFinder because we wanted to improve the way people access healthcare. As out-of-state students, we’ve had some trouble finding a primary care physician (PCP) ourselves. We also thought this kind of app could have a real impact.

What kind of experience did you have with making your own apps before?

None of us had ever built our own app before. We had all studied computer science, however, and in school we learned a lot about general-purpose programming languages like Java, Python, and C. It was really useful to get a crash course on HTML and web development at the beginning of this course. The most challenging part was learning and producing, at the same time, something none of us had ever done before, but we also felt that’s where you learn the most. Overall, the process involved relying a lot on Google and sites like Stack Overflow. Even GitHub was new to us, but it became a really helpful tool for this project. Thankfully, all the technologies and APIs we used had good, clear documentation, which let us build the app while developing our programming skills.

How long did it take to build DocFinder?

The course was divided into two parts. The lessons on spoken dialogue systems and web applications started in early June, and the actual project work started at the beginning of July. The course involved a lot of intensive, startup-style work, plus independent work that everyone did on their own.

Tell us about the technical choices.

The application is currently hosted on our university’s servers, and the code and documentation are available on GitHub. The two main APIs we used to build DocFinder were the BetterDoctor API, which gave us access to provider data in the US, and API.AI, now owned by Google, for speech recognition. API.AI is free and lets you build speech recognition bots that can even give friendly responses. The neural network behind API.AI turned out to be really easy to work with and didn’t require a lot of coding.
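As a rough illustration of what talking to API.AI from a web page involves, the sketch below builds (but does not send) a text query for an agent. It assumes API.AI’s v1 REST query endpoint (`https://api.api.ai/v1/query` with a `v=20150910` version parameter, a bearer client access token, and a JSON body with `query`, `lang`, and `sessionId`) as it was documented at the time; that API has since been superseded by newer Dialogflow APIs, so treat these details as assumptions. The token and session id are placeholders, and this is not DocFinder’s actual code.

```javascript
// Hypothetical sketch: build a text query for API.AI's (historical) v1 REST API.
// Endpoint, version date, and body fields are assumptions based on the v1 docs.
const API_AI_QUERY_URL = "https://api.api.ai/v1/query?v=20150910";

/**
 * Returns the URL and fetch() options for sending a transcribed utterance
 * to an API.AI agent. Nothing is sent here.
 * @param {string} utterance   text produced by speech-to-text
 * @param {string} clientToken the agent's client access token (placeholder)
 * @param {string} sessionId   any stable id for this user's conversation
 */
function buildApiAiRequest(utterance, clientToken, sessionId) {
  return {
    url: API_AI_QUERY_URL,
    options: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${clientToken}`,
        "Content-Type": "application/json; charset=utf-8",
      },
      body: JSON.stringify({ query: utterance, lang: "en", sessionId }),
    },
  };
}
```

In the browser, `fetch(url, options).then((res) => res.json())` would then resolve to the JSON response containing the extracted parameters.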

The voice application is a big part of DocFinder. How did you build the speech recognition?

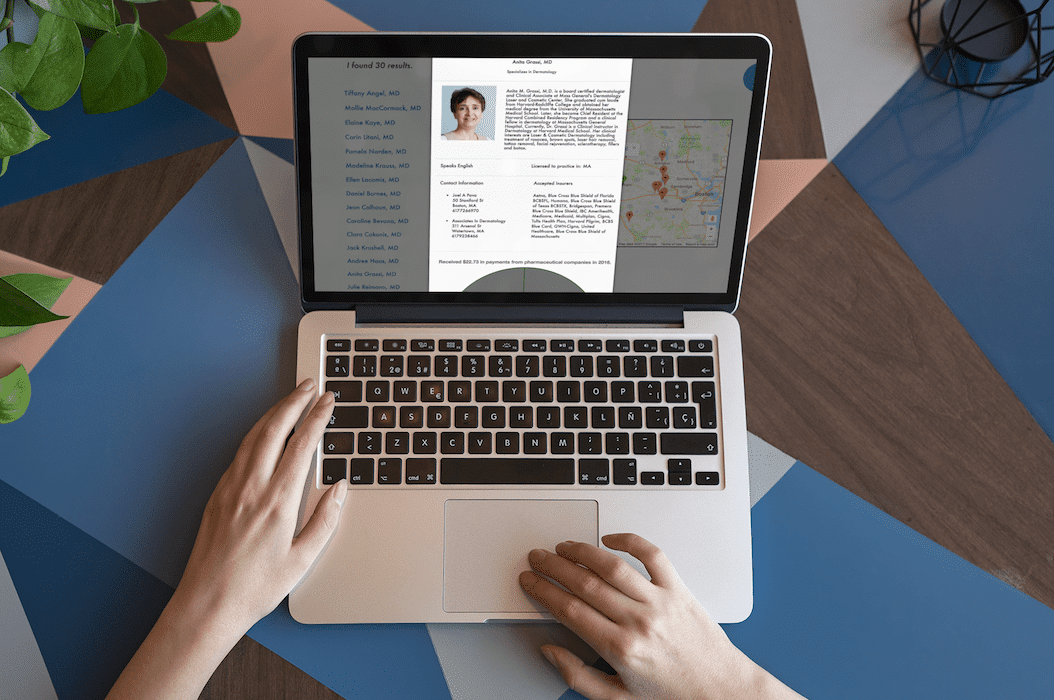

Voice commands are an important part of accessibility; we didn’t even want to include a search bar for typing queries. We used API.AI to extract specified parameters from utterances. The process goes like this: your voice command is turned into a text string, which is sent to API.AI. API.AI extracts certain parameters from it, such as the provider’s specialty, the insurance network, and the location, and returns them as a JSON object. That JSON response is then parsed and sent over to the other API, in our case the BetterDoctor API, to get the list of doctors matching your request.
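A minimal sketch of the parsing step, assuming API.AI’s v1 response shape (a `result` object with `action`, `actionIncomplete`, and `parameters`). The parameter names `specialty`, `insurance`, and `location` are hypothetical; in practice they would be whatever the agent’s intent defines.

```javascript
// Hypothetical sketch: read search parameters from an API.AI v1 response.
// Assumes parameter names "specialty", "insurance", and "location" defined in our own agent.
function extractSearchParams(response) {
  const result = response && response.result;
  // No result, or the agent still needs to ask a follow-up question.
  if (!result || result.actionIncomplete) {
    return null;
  }
  const params = result.parameters || {};
  // Unfilled optional parameters typically come back as empty strings,
  // so map blank or non-string values to null.
  const clean = (value) =>
    typeof value === "string" && value.trim() !== "" ? value.trim() : null;
  return {
    action: result.action,
    specialty: clean(params.specialty),
    insurance: clean(params.insurance),
    location: clean(params.location),
  };
}
```

For a response whose parameters are `{ specialty: "dentist", insurance: "", location: "Boston, MA" }`, this returns `insurance: null` and keeps the other two values, so later code only has to check for `null`.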

Explain how you used JavaScript to do that.

On the website side we primarily used JavaScript and HTML. Our JavaScript receives the JSON object and checks which entities and actions, such as search, it contains. Then, depending on the action, different JavaScript methods are executed. When a search is made, the JavaScript compiles a URL based on what the API.AI object returns and sends it to the BetterDoctor API. When the results come back, we format them with JavaScript into pages such as the results page and the doctor profile page.
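The dispatch-and-compile flow described above might look roughly like this. It is a hypothetical sketch, not DocFinder’s code: the BetterDoctor endpoint (`https://api.betterdoctor.com/2016-03-01/doctors`) and its query parameters (`specialty_uid`, `insurance_uid`, `location`, `limit`, `user_key`) are given as we recall them from BetterDoctor’s documentation, the handler names are made up, and the sketch assumes the spoken specialty and insurance have already been mapped to BetterDoctor’s UIDs.

```javascript
// Hypothetical sketch: dispatch on the API.AI action and compile a BetterDoctor URL.
// The endpoint version and parameter names are assumptions.
const BETTERDOCTOR_DOCTORS_URL = "https://api.betterdoctor.com/2016-03-01/doctors";

// Compiles the search URL; URLSearchParams takes care of percent-encoding.
function buildDoctorSearchUrl({ specialtyUid, insuranceUid, location }, userKey, limit = 10) {
  const query = new URLSearchParams();
  if (specialtyUid) query.set("specialty_uid", specialtyUid);
  if (insuranceUid) query.set("insurance_uid", insuranceUid);
  if (location) query.set("location", location); // e.g. "42.36,-71.06,10" (lat,lon,miles)
  query.set("limit", String(limit));
  query.set("user_key", userKey);
  return `${BETTERDOCTOR_DOCTORS_URL}?${query.toString()}`;
}

// Runs the handler registered for result.action; `handlers` must include a
// `fallback` entry for actions with no dedicated handler.
function handleApiAiResponse(response, handlers) {
  const action = response.result && response.result.action;
  const handler = Object.prototype.hasOwnProperty.call(handlers, action)
    ? handlers[action]
    : handlers.fallback;
  return handler(response.result);
}
```

A `search` handler would call `buildDoctorSearchUrl(...)` and pass the result to `fetch`, while a lookup table of handlers keeps adding new voice actions to a one-line change.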

In addition to the BetterDoctor API for the provider data and API.AI for the voice command bot, what other technologies did you use?

We decided to use Meteor for our server, which let us run servers locally on our own computers during development. We also added a number of Meteor packages for things like cookie support.

In the web browser version we also built an integration with the Google Maps API. Since we were already using the Google Geocoding API to turn a spoken address into coordinates to pass to the BetterDoctor API, adding full maps integration was just one extra step. That way, when you click on a doctor’s profile, it shows you the map.

We wanted a feature where users can see more information about their own prescriptions or any over-the-counter drugs they may take. To do this, we connected to Iodine to implement a drug information search bar in the user profiles.

We also wanted to show users how much money providers have received from pharmaceutical companies. That was made possible by integrating Open Payments Data, which shows payments from pharmaceutical companies to doctors. If you open any doctor’s profile and scroll to the bottom, a pie chart displays that data, showing the patient whether the provider has received money to promote specific drugs.
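Two of these integrations can be sketched in a few lines of JavaScript. The first function turns a Google Geocoding API JSON response (`status` plus `results[0].geometry.location.lat`/`lng`) into the `lat,lon,range` location string we assume BetterDoctor’s doctor search accepts; the second totals payment records per category (for example a drug name or payment type) to feed a pie chart. The `{ category, amount }` record shape is hypothetical, since the real Open Payments data uses its own column names, and neither function is taken from DocFinder’s code.

```javascript
// Hypothetical sketch 1: Geocoding API response -> BetterDoctor-style location string.
// The "lat,lon,range" format (range in miles) is an assumption about BetterDoctor.
function locationFromGeocode(geocodeJson, rangeMiles = 10) {
  if (
    !geocodeJson ||
    geocodeJson.status !== "OK" ||
    !Array.isArray(geocodeJson.results) ||
    geocodeJson.results.length === 0
  ) {
    return null; // address not found (e.g. status "ZERO_RESULTS") or malformed response
  }
  // Use the top-ranked match.
  const { lat, lng } = geocodeJson.results[0].geometry.location;
  return `${lat},${lng},${rangeMiles}`;
}

// Hypothetical sketch 2: total payment records per category for a pie chart.
// Assumes records were already mapped to { category, amount } objects.
function totalsByCategory(records) {
  const totals = new Map();
  for (const { category, amount } of records) {
    totals.set(category, (totals.get(category) || 0) + Number(amount));
  }
  // Return [label, total] pairs, largest slice first.
  return [...totals.entries()].sort((a, b) => b[1] - a[1]);
}
```

The sorted `[label, total]` pairs can be handed to whatever charting library draws the pie chart.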

What other features would you have wanted to add to DocFinder, had you had all the time in the world?

We had so many ideas! We would have liked to add scheduling data so that patients could make appointments directly. We also considered adding reviews or our own review system. We hope to continue developing DocFinder. At the very least, we want to move it to a public server, since it currently runs on the university’s. Next features would include better location handling in speech recognition, so you could simply say “near me,” and follow-up queries, so you could continue a search by saying something like, “Now, search for physicians.”

RESOURCES

- DocFinder Documentation on GitHub

- BetterDoctor API

- API.AI

- ResponsiveVoice.js

- Meteor

- Coolors.co

- Google Geocoding API

- Iodine

- Open Payments Data

Congrats to the team! If you want to learn more, don’t hesitate to get in touch with these folks (see contact info below).

If you would like to share your software project, let us know. We have previously covered HealthCloud, a master’s thesis project on telemedicine with video features, and many others. Build your own app with our provider data by signing up for our API for free.

GET IN TOUCH WITH THE TEAM BEHIND DOCFINDER:

Claire Sun:

- Email: csun@brandeis.edu

- LinkedIn: https://www.linkedin.com/in/claire-sun/

- GitHub: https://github.com/csuncodes

Eben Holderness:

- Email: egh@brandeis.edu

- LinkedIn: https://www.linkedin.com/in/ebenholderness/

- GitHub: https://github.com/eholderness95

Nila Mandal:

- Email: nmandal@brandeis.edu

- LinkedIn: https://www.linkedin.com/in/nila-mandal-9b107013b/

- GitHub: https://github.com/nilamandal

R Matthews:

- Email: remimatthews@brandeis.edu

- LinkedIn: https://www.linkedin.com/in/rrmatthews/

- GitHub: https://github.com/rrmatthews

- Twitter: @rrmatthews